Prepare for law school success with Zero-L

Individual learners who would like to join the next Zero-L cohort, which starts on Tuesday, February 17, 2026, must apply by Friday, February 13, 2026, at 5 p.m. EST.

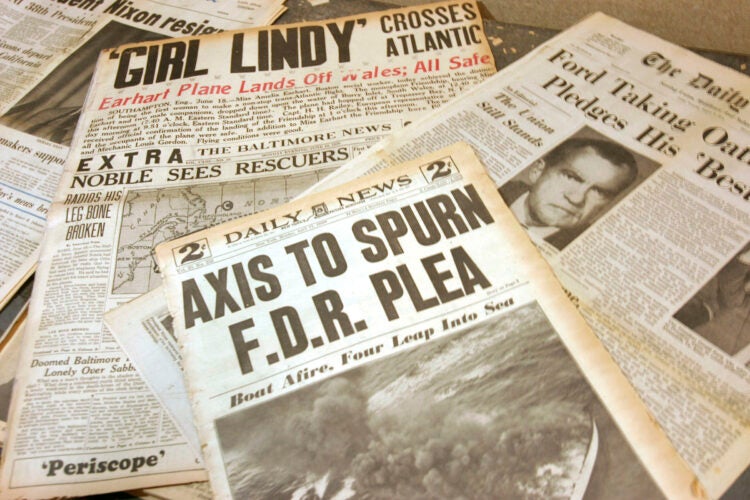

Frozen in Time

Professor Jill Lepore’s new book, a history of the U.S. Constitution, explores the consequences of its effective unamendability.

Featured Areas of Interest

- Administrative and Regulatory Law

- American Indian Law

- Animal Law

- Antitrust

- Arts, Entertainment, and Sports Law

- Bankruptcy and Commercial Law

- Children and Family Law

- Civil Litigation

- Civil Rights

- Conflict of Laws

- Constitutional Law

- Criminal Law and Procedure

- Contracts

- Comparative Law

- Corporate and Transactional Law

- Courts, Jurisdiction, and Procedure

- Disability Law

- Education Law

- Election Law and Democracy

- Employment and Labor Law

- Environmental Law and Policy

- Finance, Accounting, and Strategy

- Financial and Monetary Institutions

- Gender and the Law

- Health, Food, and Drug Law

- Human Rights

- Immigration Law

- Intellectual Property

- International Law

- Jurisprudence and Legal Theory

- Law and Economics

- Law and Philosophy

- Law and Political Economy

- Law and Religion

- Leadership

- Legal History

- Legal Profession and Ethics

- LGBTQ+

- National Security Law

- Negotiation and Alternative Dispute Resolution

- Poverty Law and Economic Justice

- Private Law

- Property

- Torts

- Race and the Law

- State and Local Government

- Tax Law and Policy

- Technology Law and Policy

- Trusts, Estates, and Fiduciary Law

500+ Courses & Seminars

47 Clinics & Student Practice Orgs

88 Student Organizations

Limitless Possibilities