Breaking Bread, Building Bridges: Dialogue Session 2

Whether you identify as a progressive leftist or a conservative Christian or anything in between, join Breaking Bread, Building Bridges—a student-led initiative funded by the Dean’s Community Connection Grant and the President’s Building Bridges Fund—for the second of six biweekly dialogue sessions to engage in a thoughtful, guided discussion on pressing moral, political, and social […]

From Law Firm to Global Impact

HuB Fireside Chat with Foley Hoag Partner, Gare A. Smith! The Human Rights and Business Students Association (HuB) is excited to host a fireside lunch event with Gare A. Smith, a leading practitioner in the human rights and business space. This lunch event will focus on Mr. Smith’s career path, his work on Human Rights Impact […]

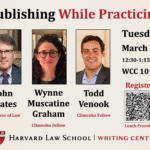

Publishing While Practicing

Want to publish a paper? Want to keep the door open for a career in academia? Join the Harvard Law School Writing Center on March 10th from 12:15 to 1:15 pm for Publishing While Practicing. Hear from law school faculty who have published legal scholarship while working at the DOJ (Todd Venook), the MacArthur Justice […]

Ring the Bell: Discussion of Derrick Bell’s “A Law Professor’s Protest”

Are you interested in critical race theory, literature and the law, and the role race plays in shaping dynamics at Harvard specifically? Join the Bell Collective for Critical Race Theory for a reading and discussion of Derrick Bell’s short story “A Law Professor’s Protest,” a work of speculative fiction about a bombing at Harvard during […]

HLS Beyond and BKC present: AI Governance and Human Alignment

In this second session of the TechReg in AI series with Alan Raul (see April 9th), we address how frontier AI companies assure human control and safety. AI is a potentially transformative technology that is developing substantially outside the government’s direct control. Because major tech companies will be largely responsible for directing and controlling the progress and governance of frontier AI under the Administration’s current AI framework, we survey how these corporate entities have set up their governance structures, instituted compliance measures (legal conformity and safety assessments, risk management frameworks), built in technical measures (evaluations, red-teaming, monitoring), and established organizational measures (risk committees, responsible scaling policies, incident response).

Interfaith Engagement at HLS

The Office of Equal Opportunity (OEO) is bringing staff and students together to hear from Rabbi Getzel Davis and Abby McElroy, who lead the Interfaith Engagement Initiative in the Office of the President. Attendees will hear from Getzel and Abby about how to best engage across faiths and create meaningful opportunities for constructive interfaith dialogue […]

Faith & Veritas 2026

Join a university-wide gathering of Harvard’s Christian alumni, students, faculty, and staff to forge new friendships and engage with thought leaders exploring the role and impact of the Christian faith in addressing contemporary challenges. Space is limited and registration is required. More information is available on the event website.

HLS Beyond and BKC present: Evidence-Based AI Policy

In this third and final session of the TechReg in AI series with Professor Alan Raul, we consider what constitutes an “AI incident” for policy and governance purposes. Who is monitoring and reporting such incidents? How does the concept account for foreseeable harms, near misses, and distinctions between systems performing as intended and those that are malfunctioning, maliciously compromised, or acting in novel or unexpected ways? As we dig into today’s incident-monitoring ecosystem, we’ll discuss relevant challenges such as underreporting, selection bias, confidentiality, and reproducibility, and how to translate scattered, anecdotal events into meaningful evidence for risk management and harm prevention.