Automating Content Policy

October 22, 2025

12:30 pm - 1:30 pm

This event has passed

Berkman Klein Center Multi-Purpose Room 515, Lewis Hall, 5th floor

1557 Massachusetts Avenue

Cambridge, MA 02138

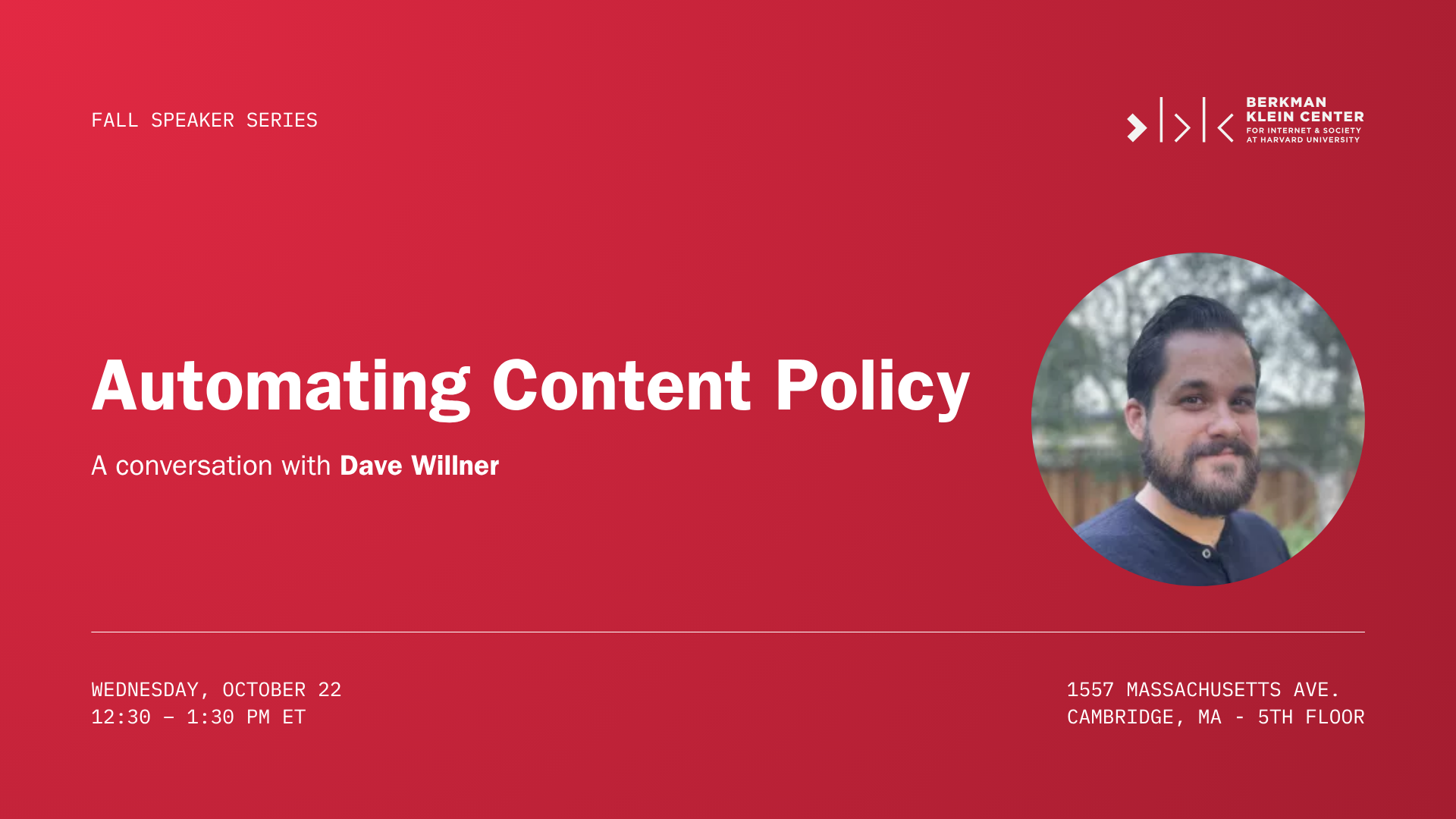

AI is no longer just moderating individual posts — it is learning how to interpret and enforce policy itself. Dave Willner — who has led trust and safety teams at Facebook, Airbnb, and OpenAI — joins journalist Meg Marco for a conversation about the shifting terrain of moderation in the age of generative AI.

From the tipping points of past technologies, like the cell phone, to today’s “policy-rewriting machines” that can run millions of classifications within hours, Willner and Marco will explore how automation is changing the scale, speed, and stakes of online governance.

New systems can ingest a company’s policy documents, apply them to vast datasets, and make millions of classifications in hours. In doing so, they both replicate the human task of drawing boundaries — deciding what falls inside or outside the rules — and extend that task far beyond traditional concerns like hate speech or misinformation.

Speakers

Dave Willner

Dave Willner is a co-founder of Zentropi, the company behind CoPE, a best-in-class small language model capable of accurate and steerable content classification.

He was previously a non-resident fellow at the Stanford Cyber Policy Center, and worked in industry as Head of Trust & Safety at OpenAI, as Head of Community Policy at Airbnb, and as Head of Content Policy at Meta (formerly Facebook).

Meg Marco

Meg Marco is the Senior Director of the Applied Social Media Lab, where she focuses on building public interest technology that helps make information available and understandable to researchers, journalists, civil society organizations, and the general public. She has held senior editorial positions at WIRED, ProPublica, and The Wall Street Journal.